While chatting with some MVP friends of mine about a specific scenario where data from e-mails needed to be read and monitored, it became clear that there are multiple ways to do it. I proposed one that I implemented at a customer a while ago and was asked to blog about the solution, so here it is. Because SCOM is not built to natively read from a mailbox, one has to come up with a workaround, and in my case I used System Center Orchestrator to do part of the job.

Challenge:

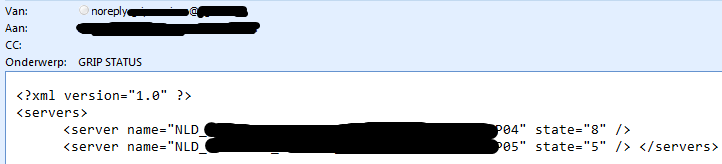

The situation is as follows. A number of servers are monitored by another company using another monitoring product. That product monitors servers from several of their customers, so we cannot access or query it directly, whether through scripts, commands, or database queries. In the end, the other company would send e-mails from their several monitoring systems to one of our mailboxes, resulting in 3 e-mails every 15 minutes. Each e-mail had an XML-formatted body containing a list of servers and their state.

- So we have to read 3 e-mails from a mailbox every 15 minutes, pull out the body of each e-mail, and merge the content into 1 XML file placed on a server with a SCOM agent on it. These steps are not native to SCOM, so we use a combination of Orchestrator and PowerShell.

- After that we can use one of several methods to monitor a text based file on a server to create the monitoring part. For this we can use SCOM.

So let us start with the first part.

Using Orchestrator to get our e-mails into an XML file

I bet there are other methods of doing this, but this is the method I selected. Orchestrator offers some flexibility and some built-in actions in the integration packs, which makes it very versatile.

Let us check out the email for a second:

We see the XML body there. In this case two servers are mentioned in the email, but with longer names than the ones we know them by, so we need to deal with that too. The XML has a header (first line) and a wrapper (opening at the start of the second line, closing at the end of the last line), with the two actual content lines in between. Notice there are carriage returns, spaces and potentially tabs in there, which makes it “nice” to filter those out while pulling the XML apart and creating a new XML file from it!

Ingredients needed:

- A destination File share where the final XML file will be placed for being monitored.

- A mailbox where those messages arrive and we can read them from

- We created an automatic rule to place those e-mails in a specific named folder in the mailbox.

- We created a second folder where we can move the already read messages to.

- An account able to read in that mailbox.

- Orchestrator to create a runbook and bring it all together.

- An integration pack (IP) for Orchestrator which can read from a mailbox. I used the “SCORCH Dev – Exchange Email” IP for this, which can be found at https://scorch.codeplex.com/

First import the Orchestrator IP needed to read the email and distribute it to the runbook servers as usual. Next, start a fresh runbook, name it appropriately, and place it in a folder where you can actually find it within Orchestrator. My advice is to use a clear folder structure for your runbooks. This is not for the benefit of Orchestrator, but for yours!

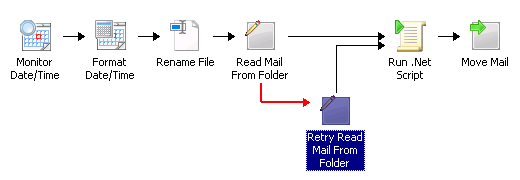

Now we create the runbook. I will put the picture of the finished runbook here first before going through the activities:

Let’s now cut up the pieces:

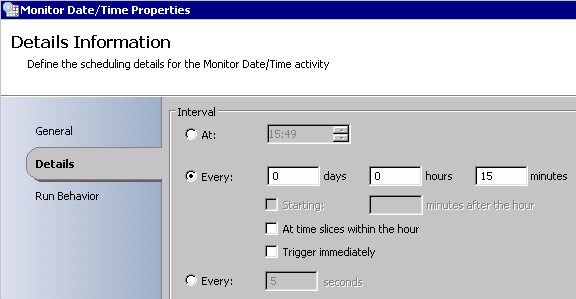

Monitor Date/Time

This one simply triggers the runbook every 15 minutes.

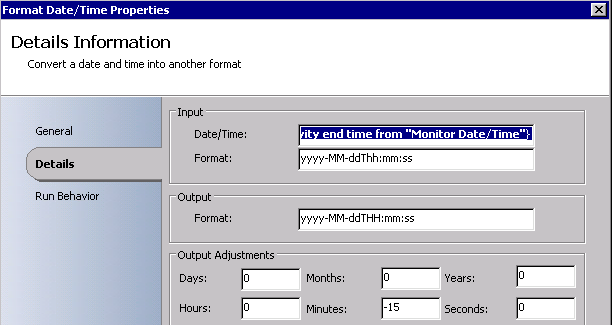

Format Date/Time

This one takes the current time from the first activity and, at the bottom, subtracts 15 minutes from it. We want to read all emails that came in between 15 minutes ago and now, so this gives us that starting point in time.

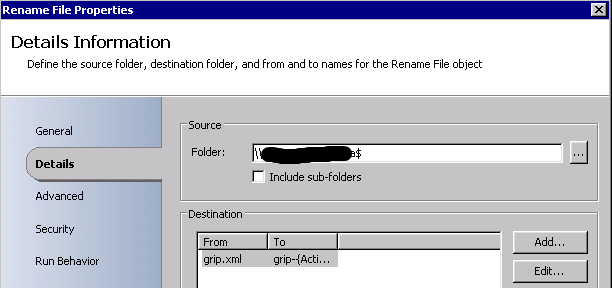

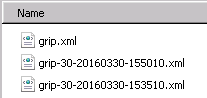
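The time window calculation is simple, but it is the basis for the mail filter later on. Below is a minimal sketch of the same arithmetic, written in Python purely for illustration (in the runbook this is done by the Format Date/Time activity, not by code). The function name `cutoff_time` is made up for this example.

```python
from datetime import datetime, timedelta


def cutoff_time(now: datetime, window_minutes: int = 15) -> datetime:
    """Return the earliest receive time a mail may have to be picked up."""
    return now - timedelta(minutes=window_minutes)


# Example: a run triggered at 10:30 looks at mail received after 10:15.
run_time = datetime(2015, 3, 1, 10, 30)
print(cutoff_time(run_time))
```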

Rename File

We wanted our monitored XML file to always have a fixed name. So when we are about to create a new version of that file, we first go to the file share, take the current XML file, and rename it by adding a date-time stamp to the name to make it unique. We wanted to be able to look back in history here; otherwise we would have chosen to just delete it. This makes the folder look like this:

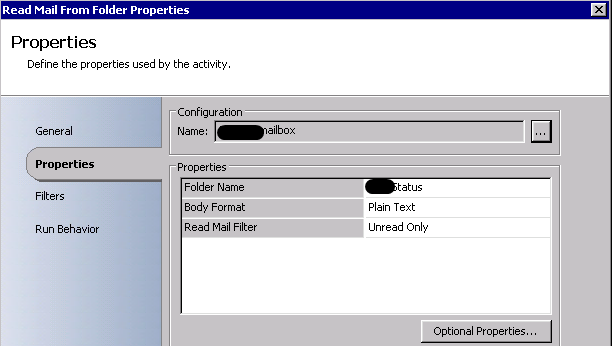
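For readers who prefer to see the idea in code: the sketch below shows the same rename-with-timestamp step in Python. In the runbook this is a standard Rename File activity, so treat this only as an illustration. The file name `servers.xml` and the timestamp layout are assumptions, not the names used at the customer.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional


def archive_name(path: Path, stamp: datetime) -> Path:
    """Build the archived name by inserting a timestamp before the extension."""
    return path.with_name(f"{path.stem}_{stamp:%Y%m%d-%H%M%S}{path.suffix}")


def archive_current_file(path: Path, stamp: datetime) -> Optional[Path]:
    """Rename the current file out of the way; do nothing if it is not there."""
    if not path.exists():
        return None
    target = archive_name(path, stamp)
    path.rename(target)
    return target
```

Because the fixed name is free again after the rename, the script further down can treat "file does not exist" as the signal that it is the first of the three runs.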

Read mail from folder

Now this is a custom activity coming from the Exchange Email IP we imported earlier.

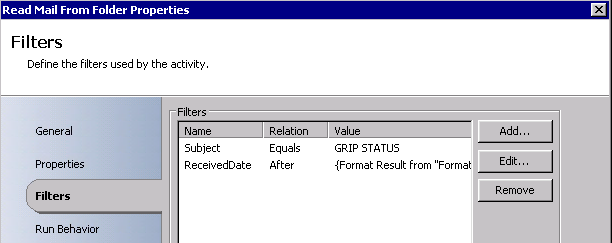

At the top we see we have to define a configuration. We will get back to that in a second. Next you can see that we are looking for unread emails in a certain folder (keep in mind the folder name must be unique in that mailbox, otherwise it may pick the other folder, which is not what you want). On the left-hand side we see Filters:

We also want those emails to have a certain subject line, and we want them to have been received after the time from the Format Date/Time activity above, meaning in the last 15 minutes.

Now to get back to the Configuration part. Many IPs in Orchestrator have a place where you can centrally set some parameters, for instance a login account, a server connection, and so on. This can be found on the top menu bar of the Orchestrator Runbook Designer under the Options menu. Find the item with the same name as the IP you are trying to configure. In this case we need to set up a connection to an email server. The type is Exchange Server, and you enter a username, password, domain, and a ServiceURL. For an Exchange server this could be https://webmail.domain.com/EWS/Exchange.asmx for example, but check this for your own environment.
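To make the combined filter explicit, here is a small Python sketch of the three conditions (unread, subject, received after the cutoff). It does not talk to Exchange; the `Mail` class and `select_mails` function are hypothetical stand-ins for what the IP activity returns, and the "subject contains" match is an assumption about how the subject filter was set.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class Mail:
    subject: str
    received: datetime
    unread: bool
    body: str = ""


def select_mails(mails: List[Mail], subject_text: str,
                 received_after: datetime) -> List[Mail]:
    """Keep unread mails with the expected subject that arrived after the cutoff."""
    return [
        m for m in mails
        if m.unread
        and subject_text in m.subject
        and m.received > received_after
    ]
```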

Retry Read mail from folder

This one will only run if the first Read mail from folder activity fails. You can set properties on the connecting arrows between the activities to make the runbook go here if the first one fails. I made the line color red and set a delay of 20 seconds on the line. Otherwise the runbook follows the other line and goes to the script. This activity does exactly the same as the previous one. We had some time-outs during certain times, so this extra loop slipped in there.

So those Read mail from folder activities should return 3 e-mails: received in the last 15 minutes, in that folder, unread, and with the right subject line. Orchestrator now knows what the body of each email contains. This also means that the next activity (the script) will run three times.
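The pattern here is "try once, wait, try once more". A minimal Python sketch of that flow follows; in the runbook it is done with link conditions and a link delay, not code. The function `read_with_retry` and its parameters are made up for the example, and `sleep` is injectable so the wait can be skipped when trying it out.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def read_with_retry(read: Callable[[], T], delay_seconds: int = 20,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call read(); if it fails, wait and call it exactly one more time."""
    try:
        return read()
    except Exception:
        # Mirrors the red link: only taken on failure, after a delay.
        sleep(delay_seconds)
        return read()
```

A second failure is not caught, which matches the runbook: there is only one retry activity.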

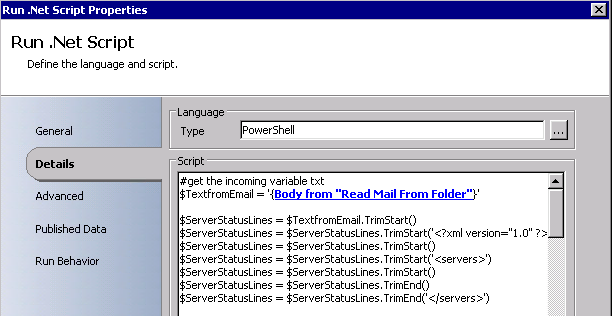

Run .net script

At the top we define this to be a PowerShell script. First we pull in the variable, which is the body of the email from the previous step. The next thing we do in the script is remove everything we do not need: empty spaces before and after several lines and entries, as well as the header and the surrounding wrapper tags. We can add those ourselves to a clean file, right? So this should give us a new string which only contains the XML entries for those servers with their state.

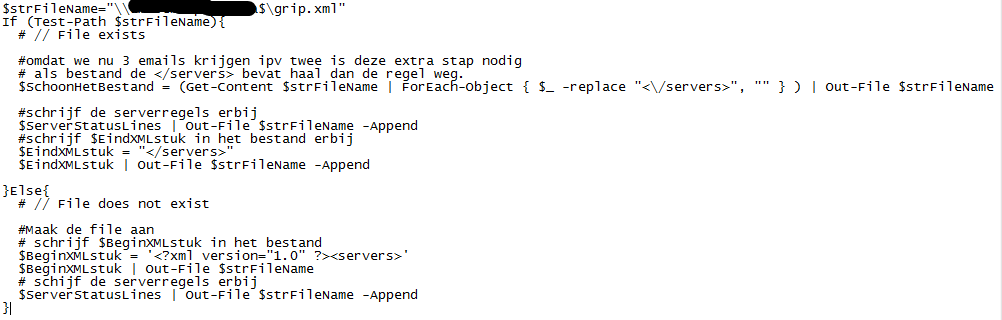
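Since the actual script is only shown as a screenshot, here is a rough sketch of the same clean-up idea in Python (the real script is PowerShell). The wrapper tag name `servers` and the function name `extract_entries` are assumptions for illustration; use whatever tag names your e-mails contain.

```python
import re


def extract_entries(body: str, wrapper: str = "servers") -> str:
    """Return only the server entries, one per line, without header or wrapper."""
    # Drop the XML declaration (the "header" on the first line).
    text = re.sub(r"<\?xml[^>]*\?>", "", body)
    # Drop the opening and closing wrapper tags, wherever they sit on a line.
    text = re.sub(rf"</?{re.escape(wrapper)}\b[^>]*>", "", text)
    # Trim spaces, tabs and carriage returns, and skip lines that end up empty.
    lines = [line.strip() for line in text.splitlines()]
    return "\n".join(line for line in lines if line)
```

The wrapper tags are removed with a pattern rather than by dropping whole lines, because in the e-mail the wrapper shares its lines with the actual content.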

Next we needed to build some tricks into this script. We know it is going to run three times and we need to stitch the contents together into one file.

Line of thought:

If there is no XML file to write to, this is the first time we run the script after the old file got renamed. So we need to create the XML file right now and add the headers to it. Next we add the body to it (server names with state).

If there is a file with the correct name, we are in either the second or the third run. In that case we simply write the body (servers and state) and add the trailing end tag to it. This works for both the second and the third run. However, if this happens to be the third run, we first check whether that trailing tag is already there and remove it. Then we write the body again and add the end tag.

So that part takes care of dumping the contents into the file following the above thought process (with the first thought coming at the end as the Else statement). Sorry for the Dutch comments, but you get the idea.

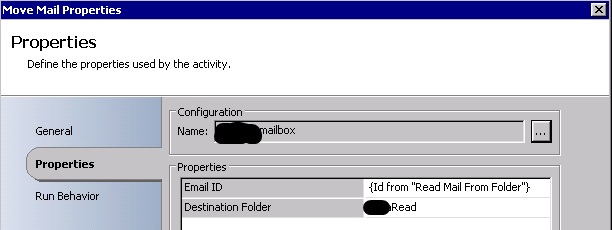
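For those who cannot read the screenshot (or the Dutch comments), the stitching logic can be sketched as follows in Python. This is not a translation of the PowerShell script. It is a slightly simplified variant that writes the closing tag on every run, so the file is well-formed after each of the three runs. The tag names and the function `append_entries` are assumptions.

```python
from pathlib import Path

HEADER = '<?xml version="1.0" encoding="utf-8"?>'
OPEN_TAG = "<servers>"    # hypothetical wrapper name
CLOSE_TAG = "</servers>"


def append_entries(path: Path, entries: str) -> None:
    """Add server entries to the XML file, creating it on the first run."""
    if path.exists():
        # Second or third run: take off the end tag if it is already there.
        content = path.read_text(encoding="utf-8").rstrip()
        if content.endswith(CLOSE_TAG):
            content = content[: -len(CLOSE_TAG)].rstrip()
    else:
        # First run after the rename: start a clean file with the headers.
        content = f"{HEADER}\n{OPEN_TAG}"
    path.write_text(f"{content}\n{entries}\n{CLOSE_TAG}\n", encoding="utf-8")
```

Calling this once per e-mail body gives one file with a single header, a single wrapper and all server entries in between.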

Move mail

Next we take the e-mails found by the Read mail from folder activity and move them to the other folder in the mailbox.

So, that is the whole runbook to get a few emails and merge them together so we can monitor the thing!

By the way, there is a separate runbook which cleans old files from that file share and old emails from that folder in the mailbox. That way we can still look back a few days to see what happened.

The monitoring part in SCOM

Now I am not going into all the details of this part. I had a reason to not link these entries directly to the monitored servers, or to write the xml file to those servers. I opted to create a watcher node (and its discovery from a registry entry on that machine). That watcher node is the server with that file share and the xml file on it.

Next I created watchers in a class, and discovered them through the registry as well. They contain the names of the servers we wanted to check for in the XML.

For each watcher it runs a PowerShell monitor which goes into the XML file and finds its corresponding entry (server name). Next it picks up the State (which is a number) and we translate the 12 possible numbers into green/yellow/red type entries and place them into the property bag. That gets evaluated into the three states we know so well.
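To illustrate the lookup the monitor script performs, here is a small Python sketch (the real monitor is a PowerShell script that fills a SCOM property bag, which is not shown here). The element and attribute names, the function `health_for`, and the mapping table are all assumptions. In particular, the real product has 12 state numbers and the few values below are placeholders, not its actual codes.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Placeholder mapping; fill in all 12 state numbers for the real product.
HEALTH_BY_STATE = {0: "green", 1: "green", 2: "yellow", 3: "red"}


def health_for(xml_text: str, server_name: str) -> Optional[str]:
    """Return green/yellow/red for a server, or None if it cannot be decided."""
    root = ET.fromstring(xml_text)
    for node in root.iter("server"):
        if node.get("name", "").lower() == server_name.lower():
            return HEALTH_BY_STATE.get(int(node.get("state", "-1")))
    # Server not present in the file: leave the decision to the caller.
    return None
```

Returning `None` for a missing server or an unmapped number keeps that decision visible. Whether such a case should turn the watcher yellow or red is a choice for your own monitor.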

Next we could throw those watcher entries for each server and also some other entries onto a dashboard. We could see the state the other party saw from their monitoring system and the state we see from SCOM side on one dashboard for those servers and monitored entries. We have the hardware/OS layer with a few extras, and they have an OS layer and application layers which we could not pick up.

Conclusion

As you can see, sometimes we run into situations where there is no other way to get monitoring data than through workarounds and the long way. This is not ideal. As you can understand, there are dependencies left and right for this whole chain to work. If there is no other way, then that is the way it has to be, but direct monitoring or a direct connection is preferred.

But this shows how you can get monitoring data from e-mails into SCOM, in this case through the use of Orchestrator and watchers because that was what we needed.

Shout-out to Cameron Fuller, amongst others, for making me write this post!

Happy monitoring!

Bob Cornelissen